The world is based on data in this generation, so information is the new coin. Today, we will explore what is data scraping. Businesses, researchers, and tech fans can get a lot of value from knowing how to access it well.

Data scraping, a process that extracts organized data from unstructured sources like websites, is a powerful tool that can enhance learning and facilitate informed decision-making.

Data scraping is not limited to a single field, but rather, it has a broad spectrum of uses, from tracking market trends to powering AI models.

However, with a lot of power comes a lot of duty because the practice often has moral and legal repercussions.

According to Flatrocktech, statistics highlight the growing impact of data scraping. Projects in 2023 collected half a million price quotes daily in the United States alone, far surpassing traditional data collection methods. This efficiency and scale underscore the importance of data scraping in today’s digital landscape, where extracting valuable insights from vast amounts of data is crucial for businesses and researchers alike.

This detailed guide will teach you everything you need to know about data scraping, including the best ways to use it, the possible problems it can cause, and how to ensure you’re following the rules.

It is suitable for beginners who want to learn the basics or experienced pros who want to know more advanced techniques.

What is Data Scraping?

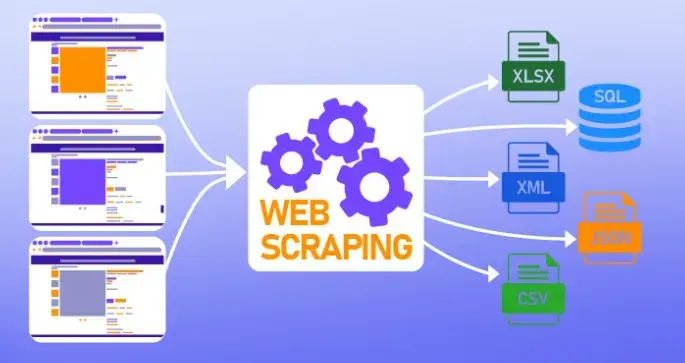

Data scraping is the process of getting organized data from sources that aren’t organized or are only partly organized.

Usually, this is done on websites, databases, or papers. It includes using automated tools or scripts to gather data and change it into a format that can be used, like a database or CSV file, so that it can be analyzed, researched, or added to other systems.

Data scraping is a powerful tool that can be used in many areas, from market research to competitive analysis, because it makes it easy to get big amounts of data quickly.

Importance and Applications of Data Scraping

The importance of data scraping lies in its ability to provide access to vast amounts of information that would otherwise be difficult or time-consuming to gather.

Businesses use data scraping to monitor competitor pricing, track market trends, and gather customer reviews, allowing them to make informed decisions and stay ahead of the competition.

Researchers and academics leverage data scraping to collect data for studies, surveys, and analysis, while developers use it to populate databases and enhance machine learning models.

In the digital age, where data is a critical asset, data scraping enables organizations to harness the full potential of available information.

Ethical Considerations and Legal Implications

While data scraping is a valuable tool, it comes with ethical and legal responsibilities.

Ethically, it is essential to respect websites’ terms of service, honor robots.txt files that indicate which parts of a site can be scraped, and avoid overloading servers with excessive requests.

Data scraping can be a gray area, as some jurisdictions have specific regulations regarding data usage and privacy.

Unauthorized scraping of copyrighted or proprietary content may lead to legal actions, including lawsuits for intellectual property infringement.

Therefore, it is crucial for anyone engaged in data scraping to understand and comply with both ethical standards and legal requirements to avoid potential consequences.

Understanding the Basics of Data Scraping

Scripts or special software make it possible to automatically pull data from websites, databases, and other places.

This is how data scraping works. Sending HTTP requests to a web server, getting a page’s HTML content, and then parsing this content to get the data that is needed are common steps in the process.

It is then cleaned up, organized, and saved so that it can be used or analyzed later. Data scraping works well because it can quickly and correctly gather a lot of information, which is much more than could be done by hand.

The data source, the scraper (also called a bot), and the analysis method are the most important parts of data scraping. The website or directory from which data is to be taken is called the data source.

A scraper is a tool or script that moves around the site, sends requests, and gathers data. It is the process of looking at raw HTML or other forms and pulling out useful data.

Standard parsing methods include HTML parsing, DOM parsing, and XPath queries. All of these parts must work together to ensure the data scraping method is accurate and effective.

Many different types of businesses use data scraping, and each one adapts the technology to meet its own needs. Companies that do business online use data scraping to monitor their competitors’ prices, find out what products are available, and get customer feedback.

In finance, scraping is used to obtain stock prices, financial records, and market news so that investors can make better choices. Researchers also use scraping to obtain large datasets from different sources that they can then study and analyze.

Media and marketing companies use data scraping to monitor brand mentions, follow social media trends, and assess material performance. In these fields, data scraping is now an important way to generate new ideas, plan strategies, and stay ahead of the competition.

Types of Data Scraping

1. Web Scraping

Web scraping is the process of automatically extracting data from websites.

It involves sending HTTP requests to a webpage, retrieving the HTML content, and then parsing this data to extract the information you need.

This technique is widely used because of the vast amount of publicly available data on the internet.

Common use cases for web scraping include price monitoring, where businesses track competitor pricing; content aggregation, where information from various sources is collected into a single platform; and market research, where companies gather data on trends, consumer behavior, and product performance.

2. Screen Scraping

Screen scraping, also known as UI scraping, is the technique of capturing data from the screen display of a computer rather than accessing the data directly from a website or database.

It mimics the actions of a human interacting with the interface, capturing the visual representation of the data. This method is often used when direct access to data is not possible, such as with legacy systems or applications that don’t offer an API.

Common use cases for screen scraping include:

- Automating repetitive tasks.

- Migrating data from old systems.

- Integrating data from applications without exposing their data structures.

3. Database Scraping

Database scraping refers to the extraction of data directly from databases using SQL queries or other database access methods.

This type of scraping is usually employed when you have authorized access to a database but need to automate the extraction of large volumes of data for analysis or transfer.

Common use cases for database scraping include data migration, where information is moved from one database to another; data warehousing, where data is collected from various sources into a central repository; and business intelligence, where scraped data is used to generate reports and insights.

4. Email Scraping

Email scraping is the practice of extracting email addresses and other contact information from websites, databases, or documents.

This technique is often used in lead generation, where businesses gather potential customer contact details for marketing campaigns.

However, it is also frequently used for data collection in customer relationship management (CRM) systems.

Common use cases for email scraping include:

- Compiling mailing lists for marketing.

- Gathering contact information for outreach campaigns.

- Integrating email data into CRM systems for better customer management.

While useful, email scraping must be conducted ethically and within the bounds of legal regulations, such as data privacy laws.

Data Scraping Techniques

1. HTML Parsing

HTML parsing involves extracting data from the HTML structure of web pages. HTML (Hypertext Markup Language) is the standard language used to create and design web pages, consisting of various elements such as tags, attributes, and nested structures.

Tools for HTML parsing, such as Beautiful Soup and lxml for Python, allow users to navigate and extract information from HTML documents by leveraging the hierarchical structure of tags.

These tools can parse the HTML content, allowing users to locate specific elements and attributes to retrieve the desired data.

2. DOM Parsing

DOM (Document Object Model) parsing refers to the process of extracting data from the structured representation of a web page’s content.

The DOM represents the document as a tree of objects, where each node corresponds to an HTML element, attribute, or text.

Techniques for DOM parsing involve navigating this tree-like structure to access and extract data. Tools like JavaScript’s DOM API or libraries such as jQuery provide methods to traverse and manipulate the DOM, making it possible to select, modify, or extract data from specific elements efficiently.

3. XPath and CSS Selectors

XPath (XML Path Language) is a query language used to navigate and select nodes from an XML or HTML document. It provides a way to specify paths to elements, allowing for precise data extraction.

CSS selectors are another method used to target HTML elements based on their attributes, classes, or relationships to other elements.

Both XPath and CSS selectors are integral for locating specific data within web pages. XPath is often used in conjunction with libraries like lxml, while CSS selectors are used with tools like Beautiful Soup or JavaScript frameworks for streamlined data extraction.

4. Regular Expressions

Regular expressions (regex) are sequences of characters that define search patterns, useful for matching and extracting specific data from text. They allow users to identify patterns such as email addresses, phone numbers, or other structured information within a larger dataset.

Basics of regular expressions include syntax elements like literals, quantifiers, and character classes.

Applying regular expressions in data scraping involves using them to filter and extract data from HTML content or text files based on predefined patterns, making it a versatile tool for data extraction tasks.

5. API-Based Scraping

API-based scraping involves using application programming interfaces (APIs) to access data from a web service or application.

APIs provide a structured way to request and receive data in formats such as JSON or XML, without needing to scrape the content directly from web pages.

Understanding APIs involves familiarizing oneself with endpoints, authentication methods, and data structures.

The advantages of API-based scraping include:

- More reliable and efficient data access.

- Compliance with the provider’s terms of service.

Reducing the risk of legal issues and server overloads.

6. Headless Browser Scraping

Headless browser scraping utilizes a web browser without a graphical user interface (GUI) to automate data extraction from web pages.

Headless browsers, such as Puppeteer or Selenium in headless mode, can render web pages and execute JavaScript, making them ideal for scraping dynamic content that relies on client-side scripting.

This technique is particularly useful when dealing with websites that require interaction or when scraping content generated dynamically through JavaScript.

7. Automated Data Extraction Tools

Automated data extraction tools streamline the process of collecting data from websites and other sources. These tools, such as Octoparse, ParseHub, and Diffbot, offer user-friendly interfaces and pre-built features for setting up and running scraping tasks.

They can handle complex data extraction scenarios, such as navigating multiple pages, dealing with AJAX content, and managing data storage.

Automated tools often come with features for scheduling, data transformation, and export, making them valuable for users who need to collect and manage large volumes of data efficiently.

Also Read These Articles:

Unlock the Power of Structured Data in SEO for 2024 to Improve Rankings

Tools for Data Scraping

1. Programming Languages for Data Scraping

Python: Python is a leading programming language for data scraping due to its simplicity and powerful libraries.

Libraries like Beautiful Soup and Scrapy are widely used for parsing HTML and XML content, making it easier to navigate and extract data.

Beautiful Soup is known for its ease of use and flexibility in handling various HTML structures, while Scrapy offers a comprehensive framework for building and managing web scraping projects, including handling requests, processing data, and exporting results.

JavaScript: JavaScript, particularly with Node.js, is also popular for data scraping, especially when dealing with dynamic content.

Libraries such as Puppeteer and Cheerio are commonly used. Puppeteer provides a headless browser environment, which allows for scraping dynamic web pages that rely on client-side JavaScript.

On the other hand, Cheerio is used for server-side HTML parsing, similar to Beautiful Soup in Python, and is valued for its performance in manipulating and traversing the DOM.

Other Languages: While Python and JavaScript are the most popular, other languages like Ruby and Java also offer data scraping capabilities. Ruby’s Nokogiri library and Java’s Jsoup are effective for parsing HTML.

Each language has its pros and cons; for instance, Python’s extensive libraries and community support make it a top choice, while Java and Ruby might be preferred in environments where these languages are already in use or where specific performance needs are required.

2. Popular Data Scraping Tools

Beautiful Soup: A Python library designed for parsing HTML and XML documents. It simplifies the process of navigating and extracting data from web pages by providing methods to search and modify the parse tree. Ideal for beginners due to its straightforward syntax and ease of use.

Scrapy: An open-source framework for Python that offers a full-featured approach to data scraping. It provides tools for sending requests, handling responses, managing data pipelines, and exporting data.

Scrapy is suited for large-scale scraping projects and can handle complex scenarios, including crawling multiple pages and following links.

Puppeteer: A Node.js library that provides a high-level API for controlling headless Chrome or Chromium browsers.

Puppeteer is particularly effective for scraping dynamic content generated by JavaScript, allowing for interaction with web pages, capturing screenshots, and generating PDFs.

Octoparse: A visual web scraping tool that allows users to build scraping tasks through a user-friendly interface without coding.

It offers features like scheduling, data cleaning, and cloud-based scraping, making it accessible for non-technical users and suitable for extracting data from complex websites.

ParseHub: Another visual scraping tool that supports data extraction from websites with dynamic content and JavaScript.

It provides a point-and-click interface for defining scraping rules and can handle pagination, AJAX, and multi-step processes. ParseHub is known for its ease of use and ability to handle complex scraping tasks.

3. Comparing Data Scraping Tools

When comparing data scraping tools, several key features should be considered, including ease of use, support for dynamic content, scalability, and integration capabilities.

Beautiful Soup is great for simplicity and ease of use but may require additional libraries for handling advanced scenarios. Scrapy excels in handling large-scale scraping projects and offers extensive features but has a steeper learning curve.

Puppeteer provides robust capabilities for dynamic content but requires JavaScript knowledge and a Node.js environment.

Octoparse and ParseHub are user-friendly, visual tools ideal for those without programming experience, though they may lack the flexibility of code-based solutions.

Each tool has its pros and cons:

- Beautiful Soup and Scrapy offer flexibility and power but require programming knowledge.

- Puppeteer is excellent for JavaScript-heavy sites but may be overkill for simpler tasks.

- Octoparse and ParseHub offer ease of use at the cost of some customization flexibility.

Choosing the right tool depends on the specific requirements of the scraping task, including the complexity of the data to be extracted and the user’s technical expertise.

How to Mitigate Web Scraping

Rate Limit User Requests: Implementing rate limits on user requests is a fundamental method to mitigate web scraping.

By controlling the number of requests a user can make within a given time frame, you can prevent excessive load on your server and reduce the risk of automated scraping tools overloading your site.

Rate limiting can be enforced through server configurations or application-level logic, and it helps in balancing the load among users while discouraging aggressive scraping activities.

Mitigate High-Volume Requesters with CAPTCHAs: CAPTCHAs (Completely Automated Public Turing tests to tell Computers and Humans Apart) are effective tools for distinguishing between human users and automated bots.

By requiring users to complete CAPTCHA challenges, such as identifying objects in images or solving simple puzzles, you can prevent high-volume requesters from accessing your site.

CAPTCHAs help deter scraping bots that lack the ability to solve these tests, reducing the risk of unauthorized data extraction.

Regularly Modify HTML Markup: Frequently changing your HTML markup can disrupt scraping scripts that rely on static page structures.

By altering element IDs, classes, and overall page layout, you can make it more difficult for scraping tools to target and extract data accurately.

Regular updates to your site’s HTML also help in maintaining a dynamic environment that is less predictable for automated scrapers, though this approach may require additional development resources and careful planning to avoid disrupting legitimate users.

Embed Content in Media Objects: Embedding content within media objects, such as images, PDFs, or videos, can be an effective way to protect data from being easily scraped.

Converting important text and data into non-text formats makes it more challenging for scraping tools to access and extract meaningful information.

This approach adds a layer of complexity for scrapers that rely on extracting text directly from HTML, although it may also impact the accessibility and user experience of your content.

Challenges in Data Scraping

1. Handling Anti-Scraping Mechanisms

Many websites employ anti-scraping mechanisms to protect their data from unauthorized extraction.

Standard anti-scraping techniques include IP blocking, which prevents repeated requests from the same IP address, and the use of CAPTCHAs, which require human interaction to verify authenticity.

Rate limiting is another strategy, which restricts the number of requests a user can make in a given timeframe. Bypassing these defenses often requires sophisticated methods, such as rotating IP addresses or using proxy services to mask the source of requests.

Advanced CAPTCHAs may require solving complex puzzles or using machine learning techniques to bypass, while rate limiting can be countered by implementing intelligent scraping strategies that mimic human browsing patterns.

2. Dealing with Dynamic Content

Scraping dynamic content presents a significant challenge, as many modern websites use JavaScript to render content on the client side.

Unlike static HTML, dynamic content is generated in real time, making it invisible to traditional scraping methods that only process server-rendered HTML.

To address this, web scrapers need to use tools like headless browsers or browser automation frameworks (e.g., Puppeteer, Selenium) that can execute JavaScript and render dynamic content before extraction.

Managing infinite scroll and AJAX (Asynchronous JavaScript and XML) is also complex, as data is often loaded incrementally. Scrapers must be designed to handle pagination or simulate user interactions to load additional content and ensure all relevant data is captured.

3. Data Quality and Cleaning

Ensuring data accuracy and quality is crucial in data scraping, as raw data often requires significant cleaning and validation. Scraped data can be inconsistent, incomplete, or contain errors due to variations in web page structure or data formatting.

Techniques for data cleaning include:

- Deduplication to remove duplicate entries.

- Normalization to standardize data formats.

- Validation to verify data accuracy against predefined rules.

Implementing robust data parsing and validation processes helps to ensure that the extracted data is reliable and usable, thereby improving the overall quality of the data collected through scraping.

Best Practices for Data Scraping

1. Ethical Data Scraping Practices

Ethical considerations are crucial in data scraping to ensure responsible use of data and compliance with legal and site-specific policies.

One fundamental practice is respecting the robots.txt file and the website’s Terms of Service, which outline the rules and restrictions for automated access to the site.

Adhering to these guidelines helps prevent legal issues and maintains good relationships with site owners.

Additionally, anonymizing scraping requests by using proxies or rotating IP addresses can help avoid detection and reduce the impact on the target website’s infrastructure.

This practice also helps protect the privacy of the scraper and avoids IP blacklisting.

2. Data Storage and Management

Effective data storage and management are essential for handling scraped data efficiently. Choosing the right database depends on the scale and complexity of the data.

For structured data, relational databases like MySQL or PostgreSQL are often suitable, while NoSQL databases like MongoDB or Elasticsearch may be preferred for unstructured or large-scale data.

Properly structuring and indexing scraped data is crucial for optimizing query performance and ensuring quick access to relevant information.

Implementing a well-organized schema and creating indexes on frequently queried fields can significantly enhance the efficiency of data retrieval and analysis.

3. Ensuring Scraping Efficiency

To maximize scraping efficiency, it is important to optimize code for performance and reliability. This includes writing efficient scraping scripts that minimize unnecessary requests and handle errors gracefully.

Techniques such as asynchronous processing and concurrency can improve the speed of data extraction and reduce overall scraping time.

Additionally, regularly monitoring and maintaining scraping scripts is necessary to address any issues that arise due to changes in website structure or anti-scraping measures.

Logging and alert systems can help track the performance of scraping tasks and quickly identify and resolve any problems that may impact data collection or quality.

Future of Data Scraping

1. Emerging Trends in Data Scraping

The future of data scraping is marked by several emerging trends that are reshaping how data is collected and utilized.

One key trend is the increasing use of machine learning and artificial intelligence to enhance scraping techniques, making them more adaptive and capable of handling complex data extraction tasks.

Additionally, the rise of big data technologies and cloud computing is enabling more scalable and efficient scraping processes, allowing for the handling of vast amounts of data with greater ease.

Advanced web scraping tools are also incorporating features like natural language processing and sentiment analysis to extract more meaningful insights from unstructured data.

2. Potential Impact of AI on Data Scraping

Artificial Intelligence (AI) is poised to significantly impact data scraping by introducing more sophisticated and automated methods for data extraction.

AI-driven tools can leverage natural language processing to better understand and extract data from diverse sources, including text-heavy or contextually complex webpages.

Machine learning algorithms can also improve the accuracy and efficiency of data scraping by learning from patterns and adapting to changes in website structures or anti-scraping measures.

This evolution will likely lead to more intelligent and autonomous scraping solutions that can handle dynamic content and provide deeper insights from the data.

3. The Role of Data Scraping in Big Data and Analytics

Data scraping is becoming increasingly integral to the fields of big data and analytics.

As organizations strive to leverage large volumes of data for strategic decision-making, data scraping provides a crucial method for acquiring external data that complements internal datasets.

The ability to scrape data from diverse sources, such as social media, forums, and news websites, enriches the analytical models and helps uncover trends and patterns that might not be visible from internal data alone.

Integrating scraped data with big data platforms allows businesses to achieve more comprehensive and actionable insights, driving innovation and informed decision-making in various sectors.

Conclusion: What Is Data Scraping

Data scraping is a powerful and versatile technique that has become integral to data collection and analysis across various industries.

In this guide, we’ve explored the fundamental aspects of data scraping, including its definition, importance, and applications, while also addressing ethical considerations and legal implications.

We delved into the basics of how data scraping works, the different types of scraping methods—such as web, screen, database, and email scraping—and the specific techniques used, including HTML parsing, DOM parsing, XPath, and more.

We examined the tools and programming languages commonly used in data scraping, such as Python and JavaScript, and highlighted popular scraping tools like Beautiful Soup, Scrapy, and Puppeteer.

Additionally, we discussed strategies to mitigate web scraping, the challenges associated with anti-scraping mechanisms, dynamic content, and data quality, and best practices for ethical scraping, data management, and efficiency.

As we look to the future, emerging trends and the impact of AI are set to further revolutionize data scraping, enhancing capabilities and integrating with big data and analytics for deeper insights.

While the potential of data scraping is immense, it is crucial to approach it with responsibility and adherence to ethical standards to ensure its benefits are maximized while respecting privacy and legal boundaries.

Data scraping is a dynamic field with evolving techniques and tools that offer valuable insights and competitive advantages.

Embracing best practices and staying informed about advancements will ensure that data scraping remains a robust and effective strategy for data-driven decision-making.

Frequently Asked Questions on What Is Data Scraping

Is Data Scraping Legal?

Data scraping's legality varies by jurisdiction and context. It is generally legal if done in compliance with terms of service, privacy policies, and applicable laws. However, scraping copyrighted or sensitive data without permission can lead to legal issues.

What are the Risks of Data Scraping?

The risks of data scraping include:

- Potential legal consequences.

- Violation of website terms of service.

- Data privacy breaches.

- Operational disruptions for the target site.

Can Data Scraping be Detected?

Yes, data scraping can be detected through various methods such as monitoring unusual traffic patterns, analyzing user-agent strings, and employing anti-scraping technologies like CAPTCHAs and IP blocking.

How to Choose the Right Data Scraping Tool?

Choose a data scraping tool based on factors like ease of use, support for the required scraping methods (e.g., HTML, API), scalability, customization options, and compatibility with your technical environment.

What are the Alternatives to Data Scraping?

Alternatives to data scraping include:

- Using APIs provided by data sources.

- Purchasing data from third-party providers.

- Leveraging public datasets available through open data initiatives.

Why Might a Business Use Web Scraping to Collect Data?

Businesses use web scraping to gather competitive intelligence, monitor market trends, collect pricing information, track customer reviews, and aggregate content from multiple sources for analysis and decision-making.

How to Prevent Data Scraping?

To prevent data scraping, implement measures such as rate limiting requests, using CAPTCHAs, regularly modifying HTML markup, embedding content in media objects, and employing IP blocking and user-agent validation.

Why is Data Scraping Illegal?

Data scraping can be illegal if it violates copyright laws, breaches terms of service, or infringes on data privacy regulations. Unauthorized access or misuse of proprietary data can also lead to legal challenges.

How are Marketers Using Data Scraping?

Marketers use data scraping to gather competitive intelligence, analyze customer sentiment, track industry trends, and collect leads and contact information. It helps in refining marketing strategies and targeting campaigns more effectively.